Detection of Discrimination, Bias, and Microaggressions in Text

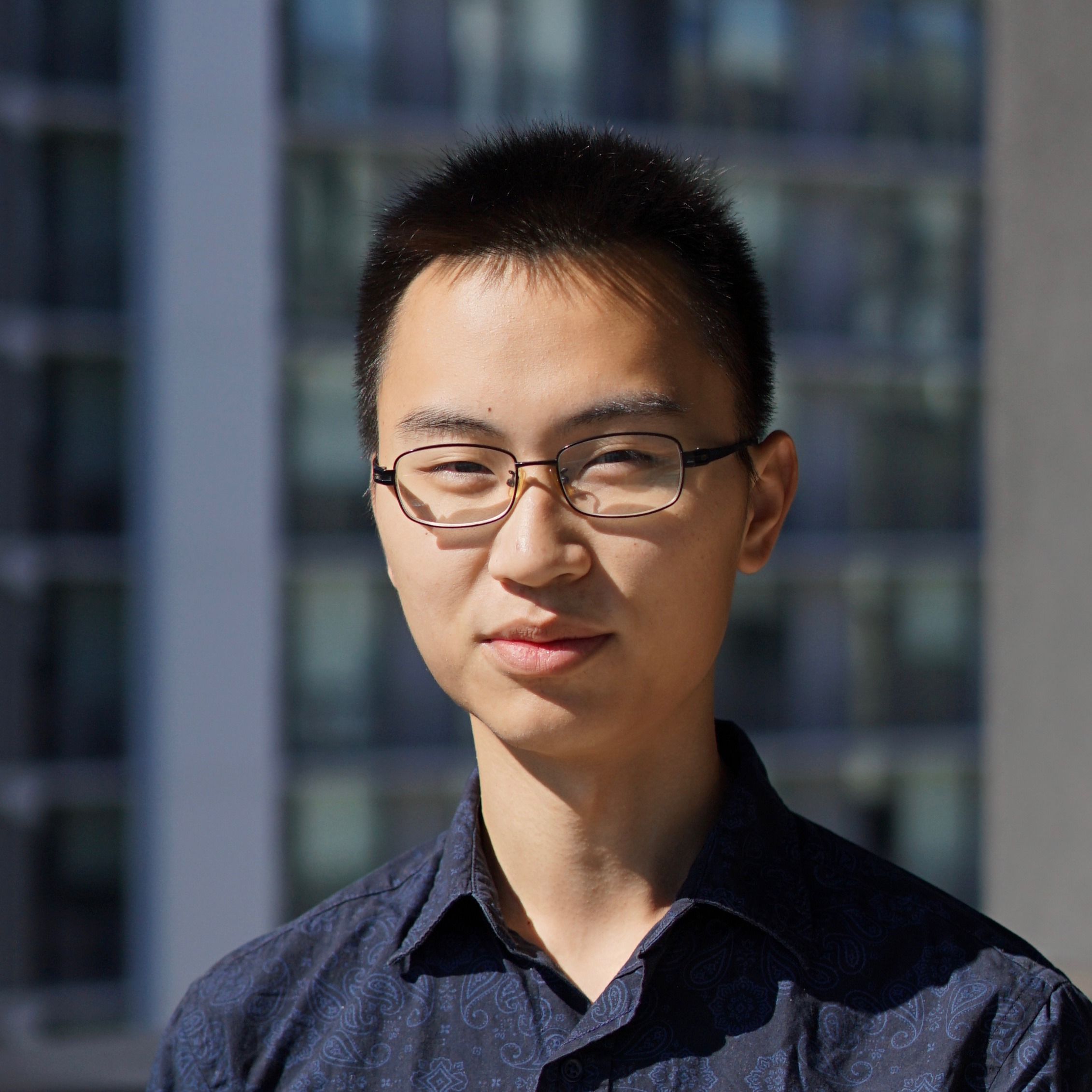

While much work in NLP has focused on detecting toxic language like hate speech, veiled offensive language remains difficult to identify. This includes comments that deliberately avoid known toxic lexicons and manifestations of implicit bias, such as microaggressions, condescending language, and dehumanization. Our lab has developed and continues to build data sets and methods for detecting this type of veiled hostility in domains like newspaper articles and social media comments.

Voigt et al. (2018) present our data set of 2nd-person social media comments, and Breitfeller et al. (2019) describe our methodology for collecting annotated microaggressions. Because direct annotations for subtle and implicit biases are difficult to collect, we have also developed an unsupervised approach to surfacing implicit gender bias (Field and Tsvetkov, 2020). In this project, we aim to identify systemic differences in comments addressed to men and women without relying on explicit annotations. Our primary methodology involves controlling for confounds: features that may be correlated with gender but are not indicative of bias. To achieve this, we draw on frameworks and matching methodology from the causality literature and reduce the influence of confounds through an adversarial training objective. Our model is able to surface comments likely to contain bias, even in out-of-domain data.
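To make the idea of matching on confounds concrete, here is a minimal sketch of greedy one-to-one matching between two groups of comments. It is an illustration only, not the implementation from Field and Tsvetkov (2020): the function name `match_pairs`, the `caliper` threshold, and the toy scores are all assumptions, and the scalar "confound score" stands in for whatever estimate (for example, a propensity score) summarizes the confounding features.

```python
from typing import List, Tuple


def match_pairs(
    scores_a: List[float],
    scores_b: List[float],
    caliper: float = 0.1,
) -> List[Tuple[int, int]]:
    """Greedy 1:1 nearest-neighbor matching on a scalar confound score.

    Returns (index_in_a, index_in_b) pairs whose scores differ by at most
    `caliper`. Items without a close enough partner are left unmatched.
    """
    available = set(range(len(scores_b)))
    pairs: List[Tuple[int, int]] = []
    for i, score in enumerate(scores_a):
        if not available:
            break
        # Closest still-unmatched item in group B (ties broken by index).
        j = min(available, key=lambda k: (abs(scores_b[k] - score), k))
        if abs(scores_b[j] - score) <= caliper:
            pairs.append((i, j))
            available.remove(j)
    return pairs


# Hypothetical confound scores for comments addressed to each group.
to_women = [0.20, 0.55, 0.90]
to_men = [0.58, 0.10, 0.22, 0.50]

pairs = match_pairs(to_women, to_men, caliper=0.05)
print(pairs)  # [(0, 2), (1, 0)]
```

Only the matched pairs would be kept for analysis, so any remaining difference between the two groups is less likely to be explained by the confound. The third comment in `to_women` has no partner within the caliper and is dropped.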

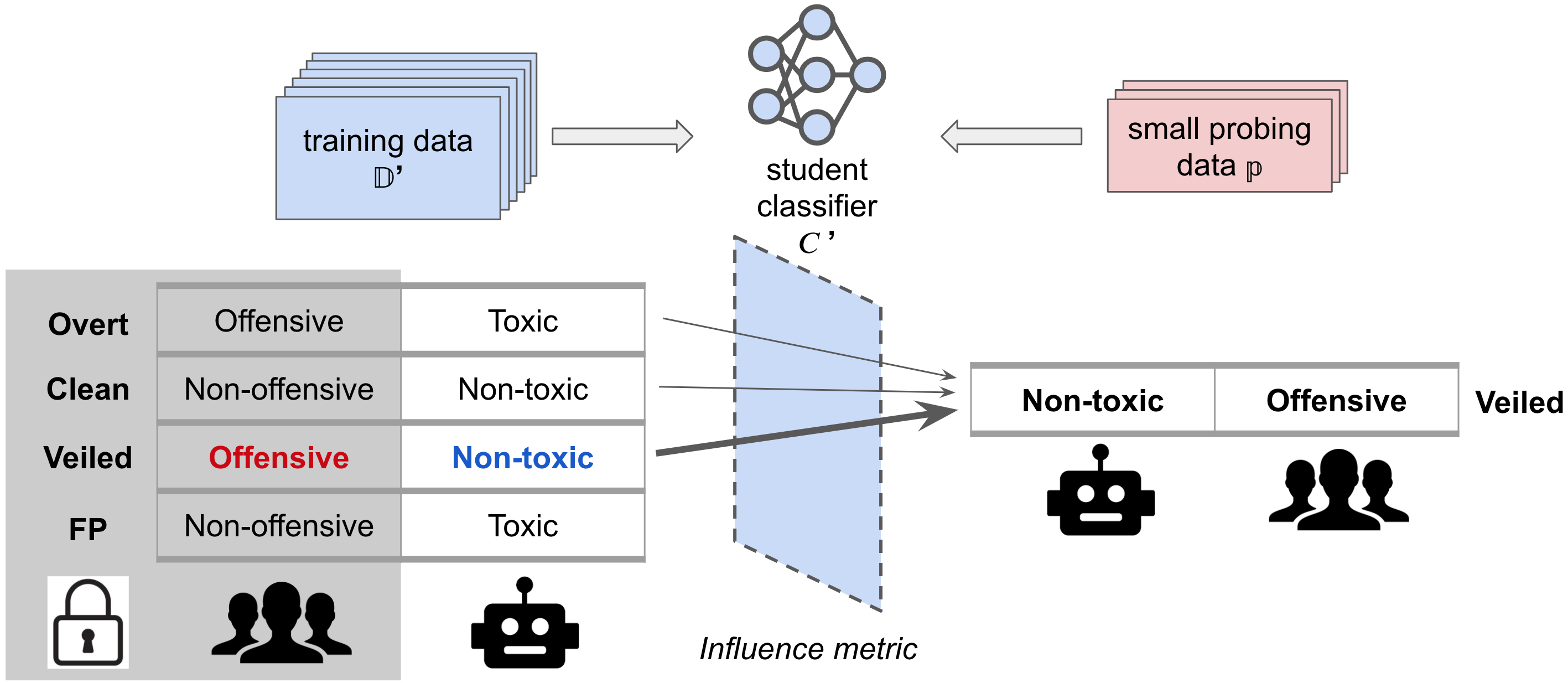

In Han and Tsvetkov (2020), we additionally aim to improve the ability of existing toxic speech detectors to uncover veiled toxicity without requiring large labeled corpora, which are difficult and expensive to collect. Our framework uses a handful of probing examples to surface orders of magnitude more disguised offenses. We augment a toxic speech detector’s training data with these discovered offensive examples, thereby making it more robust to veiled toxicity while preserving its utility in detecting overt toxicity.
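The probe-then-augment loop described above can be sketched as follows. The paragraph does not say how candidates are surfaced, so this sketch swaps in a plain bag-of-words cosine similarity as a stand-in; the names `surface_candidates`, `bow`, and `cosine`, the label convention (1 = offensive), and the toy sentences are all hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def bow(text: str) -> Counter:
    """Lowercased whitespace-token counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def surface_candidates(probes: List[str], pool: List[str], top_k: int) -> List[str]:
    """Return the top_k pool items most similar to any probing example."""
    scored = [(max(cosine(bow(p), bow(c)) for p in probes), c) for c in pool]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


# A handful of probing examples and an unlabeled pool (toy data).
probes = ["you people are so articulate"]
pool = [
    "the weather is nice today",
    "wow you are so articulate for an intern",
    "thanks for the helpful review",
]
found = surface_candidates(probes, pool, top_k=1)

# Augment the detector's existing training data with the surfaced examples.
train: List[Tuple[str, int]] = [("have a great day", 0), ("an overtly toxic comment", 1)]
augmented = train + [(text, 1) for text in found]
print(found)
```

In practice the surfaced candidates would likely need review before being added as positives, and the similarity measure would be replaced by whatever signal the detector itself provides.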

People

Anjalie Field

Xiaochuang Han

Chan Young Park

Related Papers

- Unsupervised Discovery of Implicit Gender Bias (Field and Tsvetkov, 2020), EMNLP

- Fortifying Toxic Speech Detectors Against Veiled Toxicity (Han and Tsvetkov, 2020), EMNLP

- Multilingual Contextual Affective Analysis of LGBT People Portrayals in Wikipedia (Park et al., 2021), ICWSM

- A Framework for the Computational Linguistic Analysis of Dehumanization (Mendelsohn et al., 2020), Frontiers in Artificial Intelligence

- Demoting Racial Bias in Hate Speech Detection (Xia et al., 2020), SocialNLP

- Stress and Burnout in Open Source: Toward Finding, Understanding, and Mitigating Unhealthy Interactions (Raman et al., 2020), International Conference on Software Engineering, New Ideas Track (ICSE-NIER)

- Finding Microaggressions in the Wild: A Case for Locating Elusive Phenomena in Social Media Posts (Breitfeller et al., 2019), EMNLP

- Contextual Affective Analysis: A Case Study of People Portrayals in Online #MeToo Stories (Field et al., 2019), ICWSM

- RtGender: A Corpus for Studying Differential Responses to Gender (Voigt et al., 2018), LREC