Politics, Propaganda, and Polarization in Online Media

Online platforms have allowed news and opinions to spread faster than ever, but with these advances come concerns about credibility and bias. Actors can use social media or online newspapers to manipulate public opinion by rapidly spreading information that is one-sided, misleading, or even completely false. Our lab investigates biased or politically motivated content on a variety of online platforms in global settings. Unlike research teams that focus on social network features or other forms of structured data, we take an NLP-centric approach with deep analyses of text content.

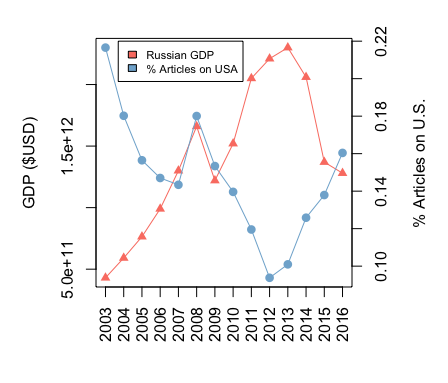

Our work on agenda-setting (what topics are reported) and framing (how topics are reported) in Russian newspapers combines NLP, political science, and economics to examine how these strategies can be used to manipulate public opinion (Field et al., 2018). We have also examined polarizing messaging posted by Indian and Pakistani politicians about a terrorist attack in India (Tyagi et al., 2020).

Our ongoing projects include an investigation of emotions in 2020 tweets about the BlackLivesMatter movement. Specifically, we are developing a state-of-the-art model for detecting emotions in tweets and analyzing how emotional content varies across users and types of content. In a separate project, we are performing a phylogeny analysis of short text messages posted on social media platforms to help identify false information spread on social networks. Unlike prior work, we are taking a data-driven approach that uses recent advances in deep neural networks for NLP and pattern recognition. Our work can help online platforms improve the reliability and transparency of published data and verify the accuracy of posted information, and it can provide insight into the spread and influence of online posts.

People

Anjalie Field

Chan Young Park

Antonio Theophilo

Related Papers

- A Computational Analysis of Polarization on Indian and Pakistani Social Media, (Tyagi et al., 2020), SocInfo

- LTIatCMU at SemEval-2020 Task 11: Incorporating Multi-Level Features for Multi-Granular Propaganda Span Identification, (Khosla et al., 2020), SemEval

- Framing and Agenda-setting in Russian News: a Computational Analysis of Intricate Political Strategies, (Field et al., 2018), EMNLP